Beyond Turing: Has the Turing Test become obsolete with genAI and frontier tech?

Beyond The Turing Test

The movie “The Imitation Game” successfully framed Alan Turing’s ideas as a compelling story, bringing the idea of automated reasoning to the broader public. Indeed, Turing was among the fathers of computer-based problem solving, which later became known, thanks to a witty marketing label, as “artificial intelligence”. However, Turing mostly focused on the ability of machines to merely reproduce human-like outputs: the game of “imitation”. This idea, that machines could one day be judged on their ability to imitate human speech, text or other intellectual outputs, developed into the so-called Turing test.

In brief, the Turing test is a criterion to evaluate the capabilities of computing machines. In the test, a human evaluator judges the text produced during a conversation between a human and a machine. The evaluator has the task of identifying the machine; the machine succeeds if the evaluator cannot reliably tell it apart from the human. The outcome does not depend on whether the answers are correct, but only on how closely they resemble those of a human.
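The phrase “cannot reliably tell it apart” can be made concrete with a little statistics. The sketch below is a minimal illustration built on a hypothetical pass criterion that is not Turing’s own: over many conversations, the machine passes if the evaluator’s rate of correct identifications is not significantly better than coin-flipping, judged with a one-sided binomial test at the 5% level. The function names `binomial_p_value` and `machine_passes` are illustrative.

```python
from math import comb


def binomial_p_value(correct: int, trials: int, p: float = 0.5) -> float:
    """One-sided p-value: the probability of getting at least `correct`
    right identifications out of `trials` if the evaluator were guessing
    with success rate `p`."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(correct, trials + 1)
    )


def machine_passes(correct: int, trials: int, alpha: float = 0.05) -> bool:
    """Hypothetical criterion: the machine passes if the evaluator's accuracy
    is not significantly better than chance at significance level `alpha`."""
    return binomial_p_value(correct, trials) >= alpha


print(machine_passes(60, 100))  # False: evaluator beats chance, machine detected
print(machine_passes(55, 100))  # True: accuracy compatible with guessing
```

Under these assumptions, an evaluator who spots the machine in 60 of 100 conversations does better than chance (p ≈ 0.03), so the machine fails; 55 of 100 is compatible with guessing, so it passes. The verdict also depends on how many conversations are judged, which is one of the open questions about the test.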

With the recent skyrocketing of generative AI and large language models (LLMs), the Turing test has been readily invoked by marketers and journalists to claim that “this LLM has successfully passed it” or “this other one is just about to pass it”. Such claims are often accompanied by equally boastful phrases such as “we are getting closer to artificial general intelligence (AGI)”. However, as marketing claims often do, these overlook two key aspects of the Turing test: first, that it merely involves imitation; second, that it is no longer the gold standard for the evaluation of automated intelligence.

Imitating Imitation?

Imitating means reproducing something that, to a third-party observer, resembles an original work.

A classical rebuttal is the Chinese Room argument (see the article by Katja Rausch on John Searle’s Chinese Room), which challenges the original Turing test independently of any claim about AGI. In a nutshell, think of a tourist who never learnt Chinese but is given a big book of common phrases and answers. When the tourist needs something (to get to a certain place, or maybe to the restroom), he or she draws from the book to interact with the locals: asks something coherent, receives a set of sounds, looks them up in the book to find their meaning, and keeps picking phrases until reaching the destination. Has the tourist learnt Chinese? No, he or she is just picking coherent sets of words that lead somewhere. Yet the whole purpose of such books is to help tourists imitate the way locals speak, in order to ask for directions. Current LLMs can be understood as very powerful machines that generate sequences of words which, with high probability (estimated from vast amounts of pre-written text), make sense when chained together: “You eat apples” is more probable than “apples eat you”.
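The “more probable sequence” idea can be illustrated with a toy bigram model, which scores a sentence by chaining the probability of each word given the previous one. This is a deliberately simplified sketch, not how LLMs work internally: real LLMs use neural networks over subword tokens and much longer contexts. The four-sentence corpus is made up for illustration, and add-one (Laplace) smoothing is an assumed choice so that unseen word pairs get a small non-zero probability instead of zero.

```python
from collections import Counter

# Hypothetical toy corpus; real models are trained on vastly more text.
CORPUS = ["you eat apples", "we eat apples", "you eat bread", "they like apples"]


def tokenize(sentence: str) -> list[str]:
    # Start and end markers let the model score the first and last words too.
    return ["<s>"] + sentence.lower().split() + ["</s>"]


def train_bigram(sentences):
    pair_counts, prev_counts, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        tokens = tokenize(sentence)
        vocab.update(tokens[1:])  # every token that can be predicted
        for prev, word in zip(tokens, tokens[1:]):
            pair_counts[(prev, word)] += 1
            prev_counts[prev] += 1
    return pair_counts, prev_counts, vocab


def sentence_probability(sentence: str, model) -> float:
    pair_counts, prev_counts, vocab = model
    tokens = tokenize(sentence)
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        # Add-one smoothing: P(word | prev) = (count(prev, word) + 1) / (count(prev) + |V|)
        prob *= (pair_counts[(prev, word)] + 1) / (prev_counts[prev] + len(vocab))
    return prob


model = train_bigram(CORPUS)
print(sentence_probability("You eat apples", model))
print(sentence_probability("Apples eat you", model))
```

With this corpus, “You eat apples” comes out 108 times more probable than “Apples eat you”: the model has no notion of what eating is, it only reflects which word pairs it has seen.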

A second argument regarding the Turing test and AGI is that we do not consider something intelligent merely because it acts like a human; we also want the content of its actions to be correct. This requirement goes beyond Turing’s original formulation, which, in fact, never spoke of “artificial intelligence” as such. For example, when asked to write scientific articles, LLMs still make up non-existent references: the text may look legitimate, but the content is plainly wrong. Not to mention disinformation (see the Researcher’s Corner interview by Daniele Proverbio and Jindrich Oukropec on disinformation).

Finally, there is the question of who the evaluator in the Turing test is. Is it a person whose job is searching for AGI, and who may therefore see it everywhere (much as the Holy Inquisition found a witch in every fifth woman), a skeptic, a neutral judge (whatever neutral means), or a panel? And over how many conversations should they judge? After reading several of them, evaluators may start to find patterns in machine responses (e.g. the use of words such as “bolstering” and “delving”, or the way sentences are constructed): are evaluators allowed to learn?

These issues, among several others, have long since relegated the Turing test to the role of an important step towards the development of automated problem-solving algorithms, but one that has already been surpassed.

ARC, a recent alternative

Going beyond the Turing test has been the focus of many academic publications for more than twenty years [1, 2, 3]. The proposals depart from the original test to varying degrees, and they have not really entered popular discourse. One recent alternative, however, is gaining traction, at least among computer scientists. It is worth mentioning, as it may become a solid benchmark in the coming years.

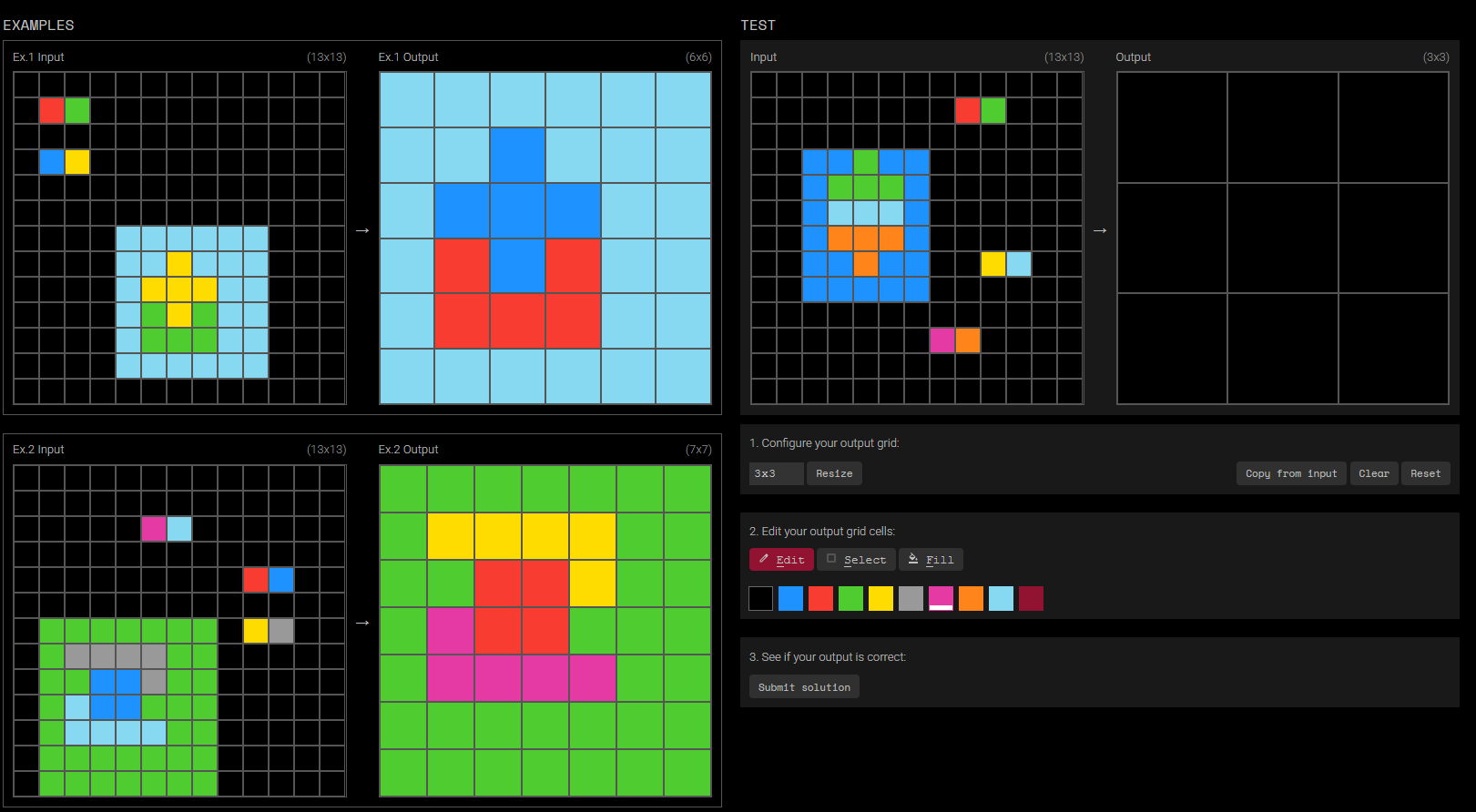

Motivated by the criticisms of imitation-based tests, and aimed solely at evaluating problem-solving skills, the ARC (Abstraction and Reasoning Corpus) benchmark was introduced by François Chollet [4] to stress-test the capabilities of AI models and to push them towards reasoning capacities that are characteristic of humans and that current AI systems clearly lack. The benchmark is not a single test, but rather a battery of short puzzles similar in spirit to IQ tests. The puzzles are developed by human experts, are based on very few examples (data) and require the ability to “reason”, i.e., to abstract and compose rules and moves. The ARC benchmark does not aim to be a way of recognizing intelligence, but rather a fair methodology for comparing intelligent agents.

A sample task is shown in the figure. On the left, there are two examples: in each, the left grid should be transformed into the right grid according to some rule we need to uncover. On the right-hand side of the figure is the test: given the input grid, what should the output be?
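To make the task format concrete, here is a minimal sketch assuming the JSON layout used in the public ARC repository: each task has `train` and `test` lists of `input`/`output` pairs, and each grid is a list of rows of integers from 0 to 9 standing for colours. The toy task, the candidate rules `flip_horizontal` and `transpose`, and the `solve` helper are all hypothetical. The solver simply tries a fixed, hand-written list of rules, which is precisely the kind of shortcut ARC is designed to defeat; real tasks require discovering and composing new rules from just a few examples.

```python
import json

# Hypothetical toy task in the ARC JSON layout (grids are rows of colour codes 0-9).
TASK_JSON = """
{
  "train": [
    {"input": [[1, 0, 0], [2, 2, 0]], "output": [[0, 0, 1], [0, 2, 2]]},
    {"input": [[3, 0], [0, 4]], "output": [[0, 3], [4, 0]]}
  ],
  "test": [
    {"input": [[5, 6, 0], [0, 0, 7]], "output": [[0, 6, 5], [7, 0, 0]]}
  ]
}
"""

Grid = list[list[int]]


def flip_horizontal(grid: Grid) -> Grid:
    """Candidate rule: mirror each row left to right."""
    return [row[::-1] for row in grid]


def transpose(grid: Grid) -> Grid:
    """Candidate rule: swap rows and columns."""
    return [list(col) for col in zip(*grid)]


def fits_training_pairs(rule, task) -> bool:
    """A rule is kept only if it reproduces every training output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


def solve(task, candidate_rules):
    """Apply the first candidate rule consistent with all training pairs to the test inputs."""
    for rule in candidate_rules:
        if fits_training_pairs(rule, task):
            return [rule(pair["input"]) for pair in task["test"]]
    return None  # no known rule explains the examples


task = json.loads(TASK_JSON)
predictions = solve(task, [transpose, flip_horizontal])
expected = [pair["output"] for pair in task["test"]]
print(predictions == expected)  # True: exact match with the expected grid
```

An answer counts only if the predicted grid matches the expected one exactly, cell by cell; an “almost right” grid earns nothing.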

Over a battery of tests like this, skilled humans can solve about 100% of the puzzles, while state-of-the-art LLMs (the latest versions of GPT, Gemini and the like) solve less than 3%. They fail badly on tasks where imitation is no longer sufficient and the abstraction of compositional rules is required.

Based on this benchmark, the ARC-AGI challenge has been established, inviting developers worldwide to outperform current AI solutions. If a system could beat ARC, it would be a giant leap that would disrupt AI as we know it today, and could potentially revolutionize the whole billion-dollar industry. Considering that it all started from challenging overstated claims about algorithms being “intelligent” solely on the basis of imitating some simple task, the whole challenge sounds pretty intense!

A marketing claim?

“Passing the Turing test” is basically a marketing claim that, as of today, has historical but little practical meaning. Going beyond its limitations is already a research endeavor that should not be overlooked, and it has led to the development of challenging benchmarks that may revolutionize the AI industry. Such a revolution will likely not happen very soon, but it may, challenging cultural prejudices and billions in investment. Going beyond simple claims is always worth the time and effort.

Bibliography

[1] Marcus, Gary, Francesca Rossi, and Manuela Veloso. “Beyond the Turing test.” AI Magazine 37.1 (2016): 3-4.

[2] Hernández-Orallo, José. “Beyond the Turing test.” Journal of Logic, Language and Information 9 (2000): 447-466.

[3] Hernández-Orallo, José. “Twenty years beyond the Turing test: moving beyond the human judges too.” Minds and Machines 30.4 (2020): 533-562.

[4] Chollet, François. “On the measure of intelligence.” arXiv preprint arXiv:1911.01547 (2019).

Ethicist, Biomedicine and Complex Systems: Daniele Proverbio holds a PhD in computational sciences for systems biomedicine and complex systems as well as an MBA from Collège des Ingénieurs. He is currently affiliated with the University of Trento and follows scientific and applied multidisciplinary projects focused on complex systems and AI. Daniele is the co-author of Swarm Ethics™ with Katja Rausch. He is a science communicator and a life enthusiast.

- Daniele Proverbio, PhD