“Machine Intelligence”: What if, in 1955, Minsky, McCarthy, Shannon and Rochester had named “Artificial Intelligence” differently?

Language is key. Language lets us communicate. We give meaning to words, and words shape our reality. They shape us.

Our reality of Artificial Intelligence is blurred. Our view is foggy. People are confused. Numerous debates revolve around “trust”, “fear”, “confusion”…

One basic question should be asked: what if biased wording is partly the cause of the technical, social and ethical bewilderment we find ourselves in right now?

Scientists, thinkers, philosophers, economists and Nobel Prize winners all bear witness to our questioning about the direction of AI, about its core values, about its purpose.

The recent EU AI proposal is yet another proof of the urgency, of the sense that something might get out of hand. It is an act of reassurance to the public. An act of clarification towards professionals.

An exercise in explainability in itself.

Building a nomenclature, creating categories, separating species, the dangerous ones from the less dangerous ones, the fine ones against the voracious ones.

In order to preserve another species, preserving us. Like Darwin, like Audubon, it is a naturalist action on technology, called humanizing AI.

If… then

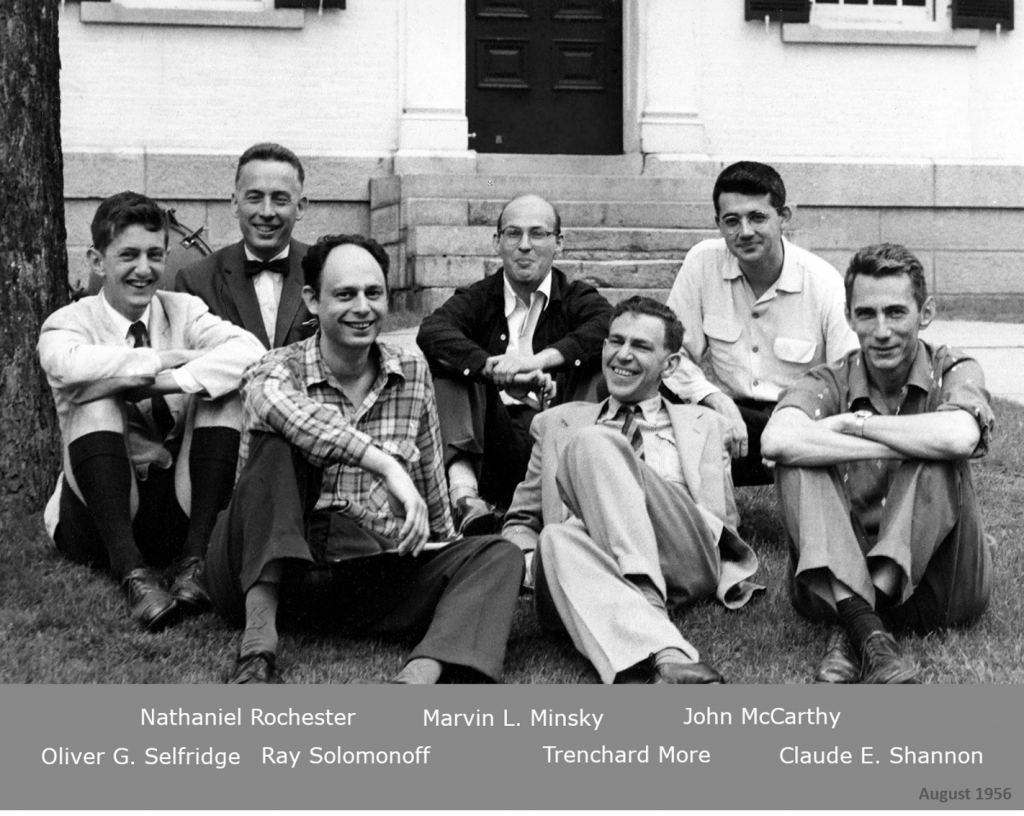

If Minsky, McCarthy, Shannon, and Rochester had not specifically used the term “artificial intelligence” in their 1955 pitch to get funding for a workshop at Dartmouth, then…

If “artificial intelligence” were called “machine intelligence” (we now use “Machine” Learning), would there be the same fear, philosophical dilemmas, cognitive meandering, and latencies in our legal or ethical frameworks?

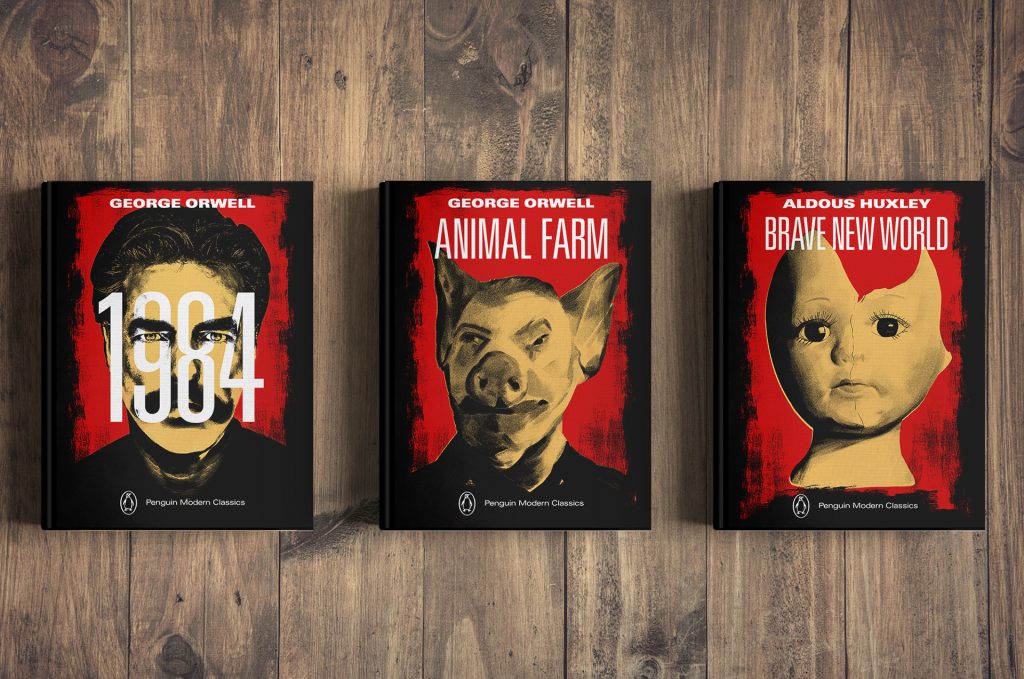

In literature, science-fiction author Karel Čapek introduced the word “robot” in 1920. He was the first to really address the conflicts of human-like machines that mean us well but eventually turn foul. It was a story like many other fictitious constructions that have been around for thousands of years. Legends, myths and folk stories originated with humanity.

In 1950, Asimov published “I, Robot”. Again, the machine-human issues became key. Two worlds met. But they did not merge yet.

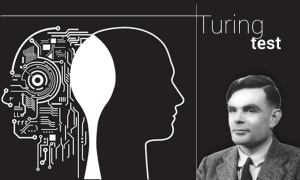

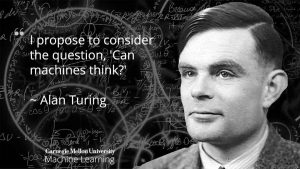

The same year, Turing published his now famous paper “Computing Machinery and Intelligence” and openly posed the question “Can machines think?”.

His words were not alarming by themselves. Rather, they were a playground for computer specialists. His questions were clearly set in a “computing machinery” world.

And the question of intelligence was a functional one. Especially since it nearly coincided with Shannon’s information theory, “A Mathematical Theory of Communication” (1948) (binary coding for the digitizing of information).

Collision of two semantic worlds: “artificial” and “intelligence”

Only when these two semantic universes collided in 1955, through the use of “intelligence”, clearly a human feature, and the adjective “artificial”, linked to the world of artefacts and non-natural creations, did the ball begin to roll.

“Artificial intelligence” was used within a pitch, a call-for-action for the funding of a research project.

One can easily understand that in such a context the wording is more sophisticated, more eye-catching and more promising, in order to get the hoped-for buy-in.

The notion “artificial intelligence”, a juxtaposition of seemingly paradoxical words in which “intelligence” is flanked by the oxymoronic “artificial”, resulted in a neologism and might be considered a rhetorical act.

The use of two figures of speech might allow such a hypothesis.

In addition, by using abstract terms such as intelligence and artificial, the authors deliberately pointed to interpretative, cognitive science, thus lifting the restrictive and limiting character of the quantitative “computing” issue.

Nowadays it has progressively morphed into a philosophical questioning about our future, the future of mankind. Was this the pioneers’ intention?

Our survival as humans. Our core values.

The cognitive sliding happened in conjunction with linguistic changes. There are three successive steps, like a small innocent algorithm.

Was the data biased, right from the start? Was the data “mislabeled” to “artificial” instead of “machine”?

1. Opposing words/worlds

Turing’s major question “Can machines think?” is merely opposing two semantic universes to create thought and reflection.

In “Computing Machinery and Intelligence”, he creates a dialog between two different worlds using different but still separate linguistic universes.

The opposition is clear: there is a binary world of 0 and 1. And there is the machinery world. The question being, can the machinery world go beyond its limited procedural functions?

The supremacy of human thinking still was in its place. But it was questioned.

2. Mixing words/worlds

“Artificial Intelligence” by Minsky and his fellows plays on two important levels. The linguistic mingling starts.

First, “artificial intelligence” is a given fact, a statement. There is no question mark. This is in contrast to Turing’s question, which was a hypothetical scenario. Judging from his texts, I don’t believe that Turing meant it to be a rhetorical question.

The mixing of the two worlds began. Who is who?

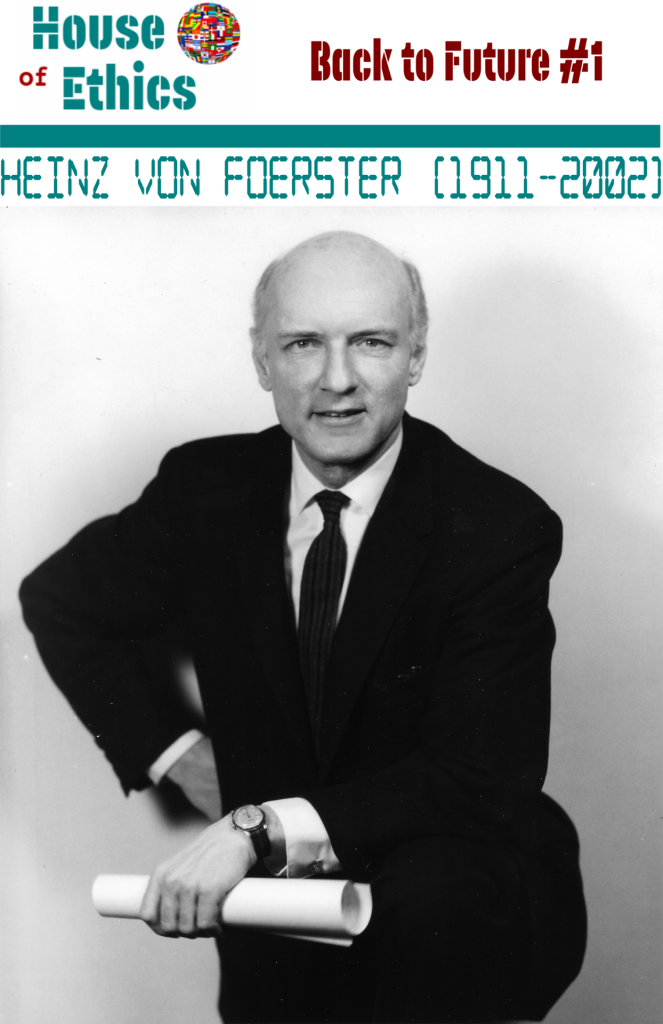

Theories such as trivial and non-trivial machines (von Foerster), the computer-brain metaphor (McCulloch and Pitts) and malleability (Moor) opened wide the possibilities for crossing frontiers and for interdisciplinarity.

Machines became computers and computers were linguistically more humanized, “anthropowordic”.

The supremacy of the mind over the computer was not a given fact any more. It was being questioned.

3. Merging of words/worlds

The “as” and the “and” are indicators of the merging of the two worlds. Nowadays, we find them in most titles of the written press, academic research and conferences: computers as humans, computer and human behavior…

The bestselling novel Machines Like Me by Ian McEwan is just one popular example in literature. Westworld (HBO), Black Mirror (Netflix) and Biohackers (Netflix) are popular series. In the academic world the topic is ubiquitous: “Can a computer become conscious over time?”, “Teaching machines to think like humans”, with conclusions such as “As artificial intelligence advances, humans behaving like machines will be a bigger problem than machines being human” by the Finnish researcher Aku Visala.

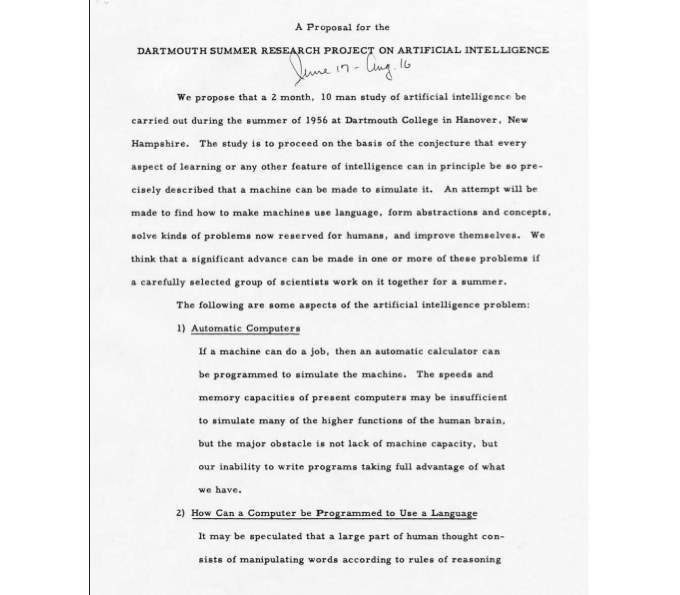

Excerpt of the Original Proposal on AI in 1955

A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence

August 31, 1955

John McCarthy, Marvin L. Minsky, Nathaniel Rochester, and Claude E. Shannon

We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.

1. Automatic Computers

2. How Can a Computer be Programmed to Use a Language

3. Neuron Nets

4. Theory of the Size of a Calculation

Brain – Computer metaphor evolution

From “&” to “to” to “as” to “else”

The Computer & the Brain

From Computer to Brain

The Brain as a Computer

Cyborg Mind

Over-evaluating machines and under-estimating us

Years ago, in one of my Data Ethics classes, I gave a very simple in-class assignment: please answer 5 questions. One of the questions was “What are your own personal values?”

The conditions were not to use a cell phone or a computer. It was handwritten on paper (great shock…). The instructions: “Answer with your own ideas and words.” I allowed twice the usual time (to avoid a stressful climate) and told them that it would not be graded.

When I got the 200 answers back, 75% of the students (Master level) had copied the list of values from Wikipedia. Even though the use of the phone was “forbidden”.

We discussed it at length, and ultimately found out that they felt safer when checking on Google, and then eventually copying, believing Google to be more accurate and trustworthy than themselves.

For me it was like a wake-up call.

They were clearly under-estimating their own capacity for thinking, for knowing. And clearly over-evaluating, over-rating the computer.

For me, this was a major catalyst to work further on HMI, and the impact machines have on us. And the dubious use of linguistics.

Words forge reality, but who is in control?

If Minsky and his colleagues had called their research project “Machine Intelligence”, would there be such bewilderment today?

Would people be so doubtful, scared and confused about their future?

Would computing power still be considered a power competing with our human brain and be viewed as a potential risk to our “being human”?

Or would AI just and rightly be seen as an assisting tool to us humans, which we are in control of?

The question of control is crucial.

Who is controlling “it”?

The “it” being “what”?

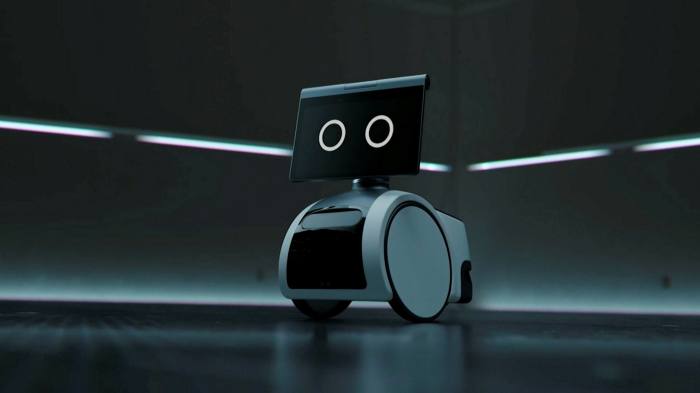

People don’t really know what AI is. People are confused. That’s why fictitious apocalyptic scenarios pop up like mushrooms. These narratives find resonance. Computers taking over, a swarm of robots eliminating us.

In the 1990s, when the Internet was becoming popular, most people, even within the professional world, did not know how to use it.

Now we know better: the Internet is a digital platform, a marketing/communication/knowledge-sharing tool. A tool.

With AI, it is confusing. Is it a tool? Is it an assistance? Is it an extension? An enhancement? Is it a substitute?

Maybe it is all of these? But not at the same time and not in every context.

Who controls it? Does it control us? Now people hear that machines are unpredictable (unsupervised ML or DL). That there is a “Black box”. That there is “dark data”.

Again a shady metaphor that does not help build trust and can be utterly misleading.

The term “Black box” used to be heard mostly in the context of plane crashes. Now it has become a reference for algorithmic opacity. This confusion adds to the fear. To the mistrust.

Cognitive shortcuts and wrong deductions

When people hear or read that AI is better than doctors in MIR (medical image recognition), they rarely hear or understand that this is about a computer program performing low-level pattern recognition, an ML model that needs high computing power and only performs at the scale reached through big data. This needs to be explained.

Here we would need new metaphors.

What people understand is that AI is smarter, more intelligent than doctors. No! It certainly isn’t.

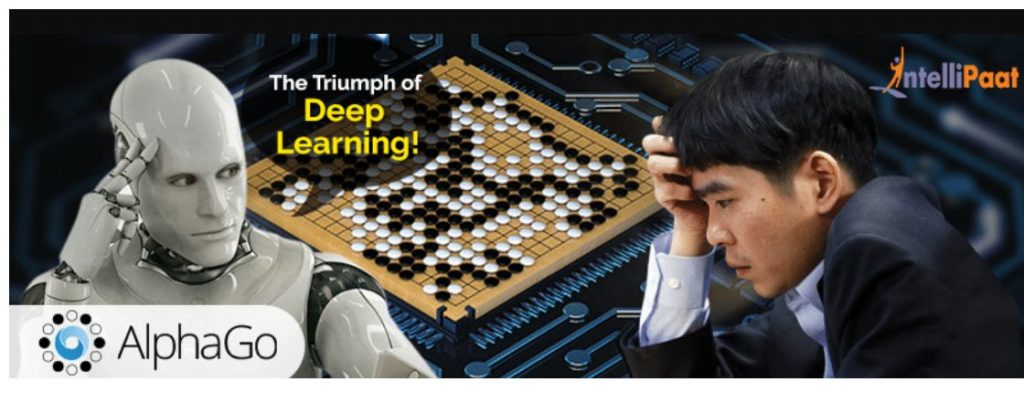

When people hear that AI won against Lee Sedol in Go, Lee Sedol being the world champion and Go one of the hardest thinking games, they understand that AI is smarter than us humans. The truth is that it just outperformed him on one specific task, but it certainly is not smarter than Lee Sedol. (Plus it loses out badly on the net carbon equation compared to Lee Sedol.)

Actually, AlphaGo never knew it won, never knew it was even playing Go; it just processed massive data in a minimal time.

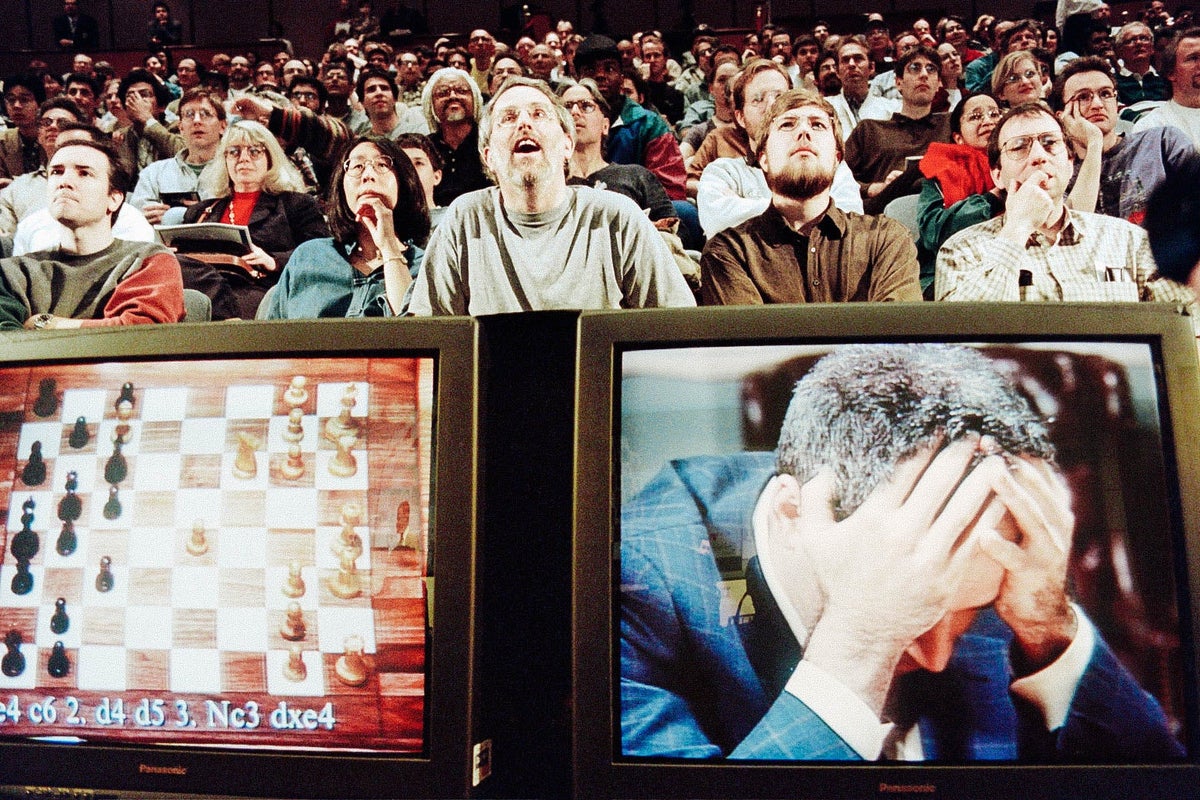

Just take a look at the picture taken the moment IBM’s Deep Blue won against Kasparov, in 1997.

The world was not prepared for it. The picture tells it all. The reactions in the public were awe, shock, stupor; Kasparov himself, feeling humiliated, beaten, reacting like a loser. This picture went around the world. Pictures speak too.

What people hear, and clearly misunderstand, is that AI is smarter than the smartest man in the world. And this narrative has been going on for nearly 25 years.

People hear AI is smart, smarter than us, smarter than the smartest and then suddenly… an autonomous car crashes, the security robot loses control and rolls into the fountain.

There is bewilderment. The same goes with crime prevention tools, facial recognition, where algorithms spit out biased and discriminatory results.

BIASED NARRATIVE THROUGH SUPERLATIVES

The “unstoppable” power of Deep Learning

The “Triumph” of Deep Learning

2014

Lee Sedol vs AlphaGo

Let’s imagine for a moment that “Artificial Intelligence” had been called “Machine Intelligence” by Minsky

Let’s imagine for a moment that the wording used by Minsky had been neutral, and not part of a project that needed funding. That he had just paraphrased the words used in the proposal, merely technical computer-related terms.

“Artificial Intelligence” vs “Machine Intelligence”

Joseph Weizenbaum described in his book “Computer Power and Human Reason: From Judgment to Calculation” how academics and heads of various labs had to change their wording, and even their arguments within the paper itself, to be eligible for funding. Mainly much-needed funding from the Army, the US Department of Defense.

A high-tech technology capable of spotting flying birds and identifying their feathers will be turned into an identification and protection technology for unidentified flying missiles.

It was about ethics too.

Of course, knowing that, you play with words. We all do.

One uses more impactful terms. One uses catchy rhetorical figures of speech to boost and anchor one’s point. One advertises. From the Latin “ad” (direction) and “vertere” (turn)… to turn towards… to divert…

Maybe if Minsky had used “Machine intelligence” or plainly “Machine Power” or “Computer Power” or even “Machine Learning”… would we be discussing different topics now?

“Smart machines” vs “Intelligent machines”

“Computer intelligence” would have been purely scientific. “Computer” is the leading notion. It’s about machines.

Like “smart objects”. There is not much hype around “smart objects” in an IoT context. Or “smart cities”. It flies a bit under the radar. Even though it is worse in terms of connectivity, HMI, human / machine balancing.

The “smart object” has a positive connotation to it. There is nothing invasive at first sight.

However, their four functions are to monitor, control, evaluate, auto-learn. Fully connectible and programmable. Fully hackable.

Sensors and cameras for real-time data-collection. And yet no real uproar by users. It is embraced quite easily. There is no real fear, no real trust issue. Not even a hint towards harm.

(One of the best points in the recent EU AI proposal, in my opinion, is that groups like children (through exposure to e-toys) and the elderly (through carebots) are explicitly mentioned and cared about.)

So why is there no fuss and fear about “smart objects”?

Maybe because it is clear that these are “objects”. If they were called “little smart connected brains” it would probably be a different story with all the Alexas surrounding us.

A question of perspective: benevolence vs malevolence

Our brain is not in “alert mode” with “smart objects”.

Intuitively we believe ourselves capable of controlling the object. Many people are afraid of ghosts or noises or voices but unafraid of tigers or elephants.

In a benevolent context questions are different. They tend to be functional. “What can this object do to help me?”, as in the case of wearables, “How can I use them?”. The approach is one of benevolence.

However, if we talk about AI the wording is “risk-based”, verbs used are “to harm”, “to protect” (like Asimov). The recent EU AI proposal is a shining example of malevolent semantics used for a protective purpose and in a risk-assessment perspective.

The linguistic approach clearly is one of malevolence.

What if?

If Minsky and his friends had used “machine intelligence”, would we then…

have a benevolent perspective on AI?

Maybe the questions would revolve around “How can AI help, assist, support us?” Like crutches, like medicine, like electricity, like fire. It would help us to see, not blind us.

Maybe we would have seen it as a clear way to improve our life, to intelligently integrate AI and create a continuous momentum.

Maybe we would have been quicker in prohibiting and clearly punishing misuse, deepfakes, facial recognition, data thefts, hospital hackings, bias, discrimination…

Maybe there would be no need to ban and no highest-risk categories in the EU AI proposal for a regulation.

Maybe we would not need ethics, AI ethics, Data ethics because it implicitly would have been obvious that this is not harming us.

Maybe there would not be questions like “Is the machine more human like a person?”. Gynoids like Sophia or Erica would clearly be seen as machines, as puppets, as feats of technology, but not as our competitors, as citizens, as future mothers, as spokespeople for female rights (Ai-da, the fembot during its art exhibition).

Maybe there would be less confusion in people’s minds. More consensus amongst professionals. A machine would clearly be seen as a machine. And we would clearly feel in control and prohibit the uncontrollable.

By the way, the Merriam-Webster dictionary lists amongst its various definitions of artificial the following: “lacking in natural or spontaneous quality”, like an artificial smile.

So are we all victims of a biased algorithm with a faulty input, some mislabeled data?

Should it have been labeled “machine intelligence” and not “artificial intelligence”?

- Founder HOUSE OF ETHICS

Katja Rausch specializes in the ethics of new technologies, and is working on ethical decisions applied to artificial intelligence, data ethics, machine-human interfaces and business ethics.

For over 12 years, Katja Rausch has been teaching Information Systems at the Master 2 in Logistics, Marketing & Distribution at the Sorbonne and for 4 years Data Ethics at the Master of Data Analytics at the Paris School of Business.

Katja is a linguist and specialist in 19th century literature (Sorbonne University). She also holds a diploma in marketing, audio-visual and publishing from the Sorbonne and an MBA in leadership from the A.B. Freeman School of Business in New Orleans. In New York, she worked for 4 years with Booz Allen & Hamilton, management consulting. Back in Europe, she became strategic director for an IT company in Paris where she advised, among others, Cartier, Nestlé France, Lafuma and Intermarché.

Author of 6 books, with the latest being “Serendipity or Algorithm” (2019, Karà éditions). Above all, she appreciates polite, intelligent and fun people.
